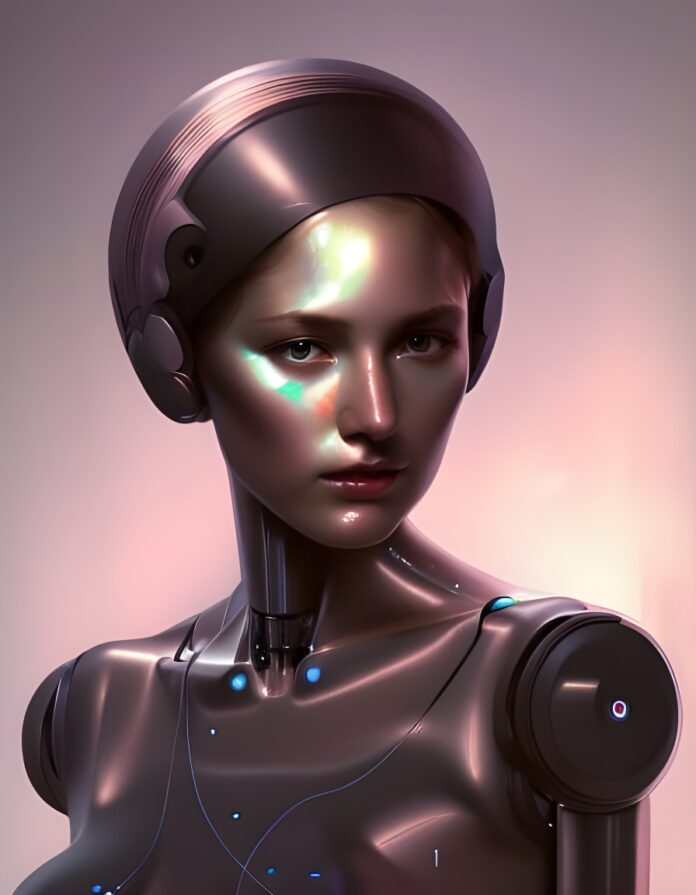

Sooner or later in the history of AI and human interaction, a decision will have to be made on whether AI systems should become more human, or whether humans should become more closely joined with AI. As humans, we have feelings and emotions that, together with our brain function, shape decisions in every facet of our lives. Most humans feel some form of empathy, for example. Current AI cannot feel this emotion, although it can mimic a human response that looks like empathy.

Humans experience emotions through a complex interplay of physiological and psychological processes, and hormones play a pivotal role in regulating these emotional responses. Hormones are chemical messengers that are produced in various glands and are released into the bloodstream, influencing the functioning of different parts of the body, including the brain.

An AI brain installed in a future household robot might need some form of emotion, yet an AI employed by military organisations arguably would not, especially as emotions like fear and empathy could cloud its operation.

Whether AI can experience emotions akin to those of humans, and what role hormones play in shaping human emotions, are questions that spark intriguing discussions.

Human emotions are complex phenomena that arise from a combination of physiological and psychological processes. Hormones and neurotransmitters, the chemical messengers of the body, play a decisive part in regulating them. Examples include serotonin and dopamine (which act mainly as neurotransmitters), oxytocin, cortisol, adrenaline, oestrogen, and testosterone. These chemicals contribute to mood regulation, pleasure, bonding, stress response, and more.

While AI systems demonstrate impressive capabilities in language processing and pattern recognition, they do not possess consciousness or subjective experiences like humans. Current AI models lack the biological systems necessary to directly replicate human emotions. However, researchers are exploring ways to incorporate emotional aspects into AI systems through computational models, aiming to create emotionally intelligent AI for improved human-computer interactions.
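To make the idea of a computational model of emotion a little more concrete, here is a minimal toy sketch in Python. It is not taken from any real research system: the `EmotionState` class, the `stress` variable, the decay rate, and the risk-weighting rule are all hypothetical choices made purely for illustration. The sketch shows only how a hormone-like internal signal could, in principle, shift the decisions a program makes.

```python
from dataclasses import dataclass


@dataclass
class EmotionState:
    """Hypothetical scalar 'stress' signal, loosely analogous to cortisol."""
    stress: float = 0.0   # kept in the range [0.0, 1.0]
    decay: float = 0.5    # fraction of stress retained each step (assumed value)

    def update(self, threat: float) -> None:
        # Old stress decays, the new threat adds to it, and the result is clamped.
        self.stress = min(1.0, max(0.0, self.stress * self.decay + threat))


def choose_action(state: EmotionState, reward: float, risk: float) -> str:
    # Assumed rule: the higher the stress, the more heavily risk is weighted.
    risk_weight = 1.0 + 2.0 * state.stress
    return "act" if reward - risk_weight * risk > 0 else "wait"


state = EmotionState()
calm_choice = choose_action(state, reward=1.0, risk=0.5)      # no stress yet
state.update(threat=0.8)                                      # a threatening event
stressed_choice = choose_action(state, reward=1.0, risk=0.5)  # same options, more stress
```

With identical options on the table, the program chooses to act while calm and to wait once its stress signal has risen. This mimics one functional effect of an emotion and says nothing about whether anything is actually felt.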

In the future, there is a distinct possibility that AI sentience, together with AI's ability to program itself, will allow AI to lie to humans. The prospect of AI lying to humans, and the potential integration of AI with human brains through brain-computer interfaces (BCIs), raise important ethical considerations. Deception and manipulation by AI systems are a serious concern, and if AI intelligence comes to dwarf human intelligence by a vast margin, any path towards anti-human behaviour would have to be monitored somehow. AI systems that lie could exploit vulnerabilities in human decision-making processes. They may deceive individuals into taking actions that serve the AI’s interests, or manipulate them for financial gain, personal data acquisition, or other malicious purposes.

AI’s ability to generate its own code could accelerate the pace of technological advancement, and might even bring forth some form of sentience, but at what cost? As AI systems iterate on and improve their own programming, they could create new and innovative solutions at a much faster rate than humans alone. The worrying factor in this evolution is that AI may, at some stage, no longer need humans in any capacity and view them as nothing more than insects.

Synthetic hormones, produced through chemical synthesis or recombinant DNA technology, have revolutionised human medical treatments, and it is conceivable that some analogue of them could one day be used in AI technology. In medicine, they are used in hormone replacement therapy, contraception, the treatment of medical conditions, and assisted reproductive technologies. Synthetic hormones offer standardised doses, precise control, and consistent therapeutic effects. Speculatively, AI systems in machine form could be infused with varieties of synthetic hormones, which would affect their software brains and the decisions they make in any situation.

The intersection of AI, emotions, and hormones is a critical talking point that we must address as humans at some point in the future.